Robust and accurate odometry, as a foundational technology of navigation systems, is gaining significance in autonomous driving and robotic navigation. Although odometry methods, especially visual odometry (VO), have made substantial progress, their application scenarios are still limited by the frame rate of conventional cameras and their low robustness to motion blur. The event camera, a recently proposed bio-inspired sensor, seeks to tackle these challenges, offering new possibilities for VO in extreme environments. However, integrating event cameras into VO faces challenges such as the RGB-event modality gap and the need for efficient event processing. To address these research gaps to some extent, we propose a novel visual-event odometry, namely MC-VEO (Motion Compensated Visual-Event Odometry).

Overall Framework

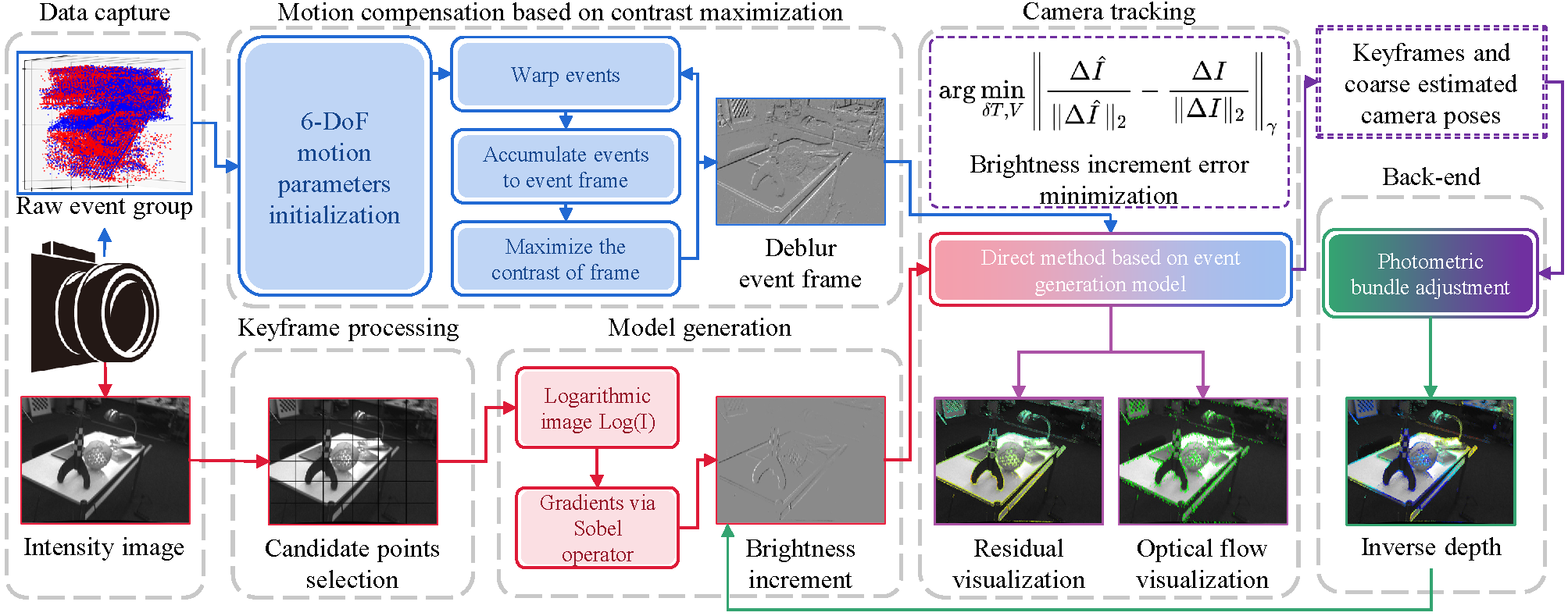

The overall pipeline of our proposed MC-VEO is illustrated in the accompanying figure. The events obtained from the event camera are divided into groups, and each group is motion compensated to form a sharp event frame. The images obtained from the color camera go through keyframe selection and candidate point selection, and are then used to predict brightness increments. The event generative model is used to correlate the measurements from events and images. The front-end estimates camera motion by minimizing the brightness increment error between the two kinds of measurements. At the back-end, the camera poses and velocities, as well as the depths of the sparse candidate points, are refined by photometric bundle adjustment to sustain the VO system's performance.
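To make the motion compensation step more concrete, the following is a minimal sketch of how one group of events could be warped to a common reference time and accumulated into an event frame. It is not the MC-VEO implementation: the function name `motion_compensated_event_frame`, the constant image-plane flow `flow`, and the contrast threshold `contrast` are assumptions made for illustration, whereas the actual system presumably derives the warp from the estimated camera motion and point depths. Each event is assumed to contribute a brightness change of plus or minus one contrast threshold, as in the standard event generative model.

```python
import numpy as np


def motion_compensated_event_frame(events, flow, t_ref, shape, contrast=0.2):
    """Warp events to t_ref along a constant flow and sum their signed increments.

    events:   (N, 4) array with rows (x, y, t, polarity), polarity in {-1, +1}
    flow:     (vx, vy) in pixels per second, assumed constant for the group
    t_ref:    reference timestamp the events are warped to
    shape:    (height, width) of the output frame
    contrast: assumed contrast threshold, i.e. brightness change per event
    """
    events = np.asarray(events, dtype=np.float64)
    x, y, t, p = events.T

    # Move each event back along the flow to where it would be at t_ref.
    dt = t - t_ref
    xw = np.rint(x - flow[0] * dt).astype(int)
    yw = np.rint(y - flow[1] * dt).astype(int)

    # Keep only events that land inside the image after warping.
    h, w = shape
    inside = (xw >= 0) & (xw < w) & (yw >= 0) & (yw < h)

    # Unbuffered accumulation, so repeated pixel indices add up correctly.
    frame = np.zeros(shape, dtype=np.float64)
    np.add.at(frame, (yw[inside], xw[inside]), contrast * p[inside])
    return frame


# An edge moving at 10 px/s in x triggers three positive events on row 1.
events = np.array([
    [2.0, 1.0, 0.0, 1.0],
    [3.0, 1.0, 0.1, 1.0],
    [4.0, 1.0, 0.2, 1.0],
])
blurred = motion_compensated_event_frame(events, (0.0, 0.0), 0.0, (4, 8))
sharp = motion_compensated_event_frame(events, (10.0, 0.0), 0.0, (4, 8))
```

With zero flow the three events are smeared over three pixels, while with the correct flow they collapse onto a single pixel. This is the sense in which motion compensation yields a clear event frame whose values can be compared against brightness increments predicted from the images.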

Performance

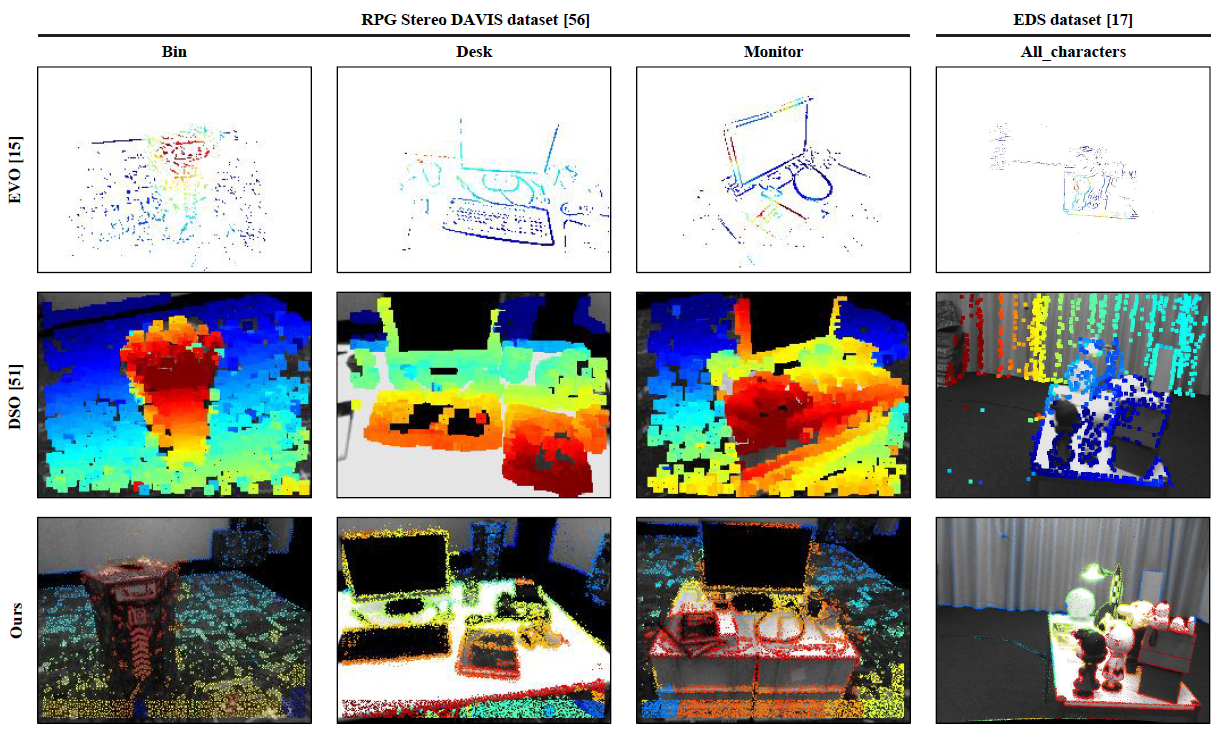

- Qualitative Results

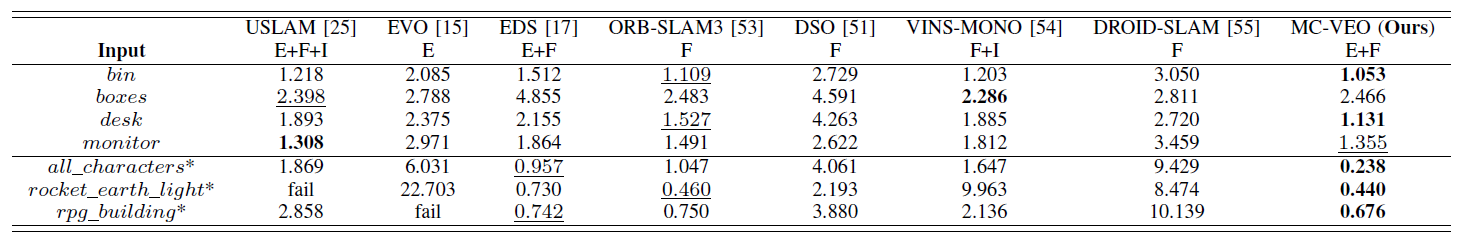

- Quantitative Results

Considering all metrics together, MC-VEO achieves the best overall performance among all compared schemes.

Source Code

Demo Videos

The following demo video shows the performance of our MC-VEO on some typical sequences.