LiDAR-Inertial-Camera Calibration and SLAM (Tutor: Yunda SUN, WeChat: syd17801034524)

1> Calibrate the extrinsic parameters of the LiDAR-Inertial-Camera sensor system.

2> Using the calibrated extrinsic parameters, implement tightly coupled LiDAR-Inertial-Camera SLAM.
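The extrinsic parameters in objective 1 are the rigid transforms between sensor frames. The sketch below shows what the LiDAR-camera extrinsic is used for: projecting a LiDAR point onto an image pixel. It assumes a rotation `R_cl` and translation `t_cl` that map LiDAR-frame points into the camera frame, and an undistorted pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`. All names and numeric values are hypothetical and are not taken from the project.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def lidar_to_camera(p_lidar: Sequence[float],
                    R_cl: Sequence[Sequence[float]],
                    t_cl: Sequence[float]) -> Vec3:
    """Map a point from the LiDAR frame to the camera frame: p_c = R_cl * p_l + t_cl.

    Assumed convention (hypothetical): R_cl, t_cl take LiDAR coordinates to camera coordinates.
    """
    x, y, z = (
        sum(R_cl[i][j] * p_lidar[j] for j in range(3)) + t_cl[i]
        for i in range(3)
    )
    return (x, y, z)


def project_pinhole(p_cam: Vec3, fx: float, fy: float,
                    cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a camera-frame point to pixel (u, v) with an undistorted pinhole model.

    Returns None for points at or behind the camera centre (z <= 0).
    """
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    # Hypothetical extrinsic: LiDAR axes (x forward, y left, z up) rotated to
    # camera axes (x right, y down, z forward), plus a 10 cm offset along camera x.
    R_cl = [[0.0, -1.0, 0.0],
            [0.0, 0.0, -1.0],
            [1.0, 0.0, 0.0]]
    t_cl = [0.1, 0.0, 0.0]
    p_c = lidar_to_camera((10.0, 1.0, -0.5), R_cl, t_cl)
    print(p_c)                                                 # approx (-0.9, 0.5, 10.0)
    print(project_pinhole(p_c, 500.0, 500.0, 320.0, 240.0))    # approx (275.0, 265.0)
```

With an accurate extrinsic, projected LiDAR points line up with the corresponding image content. The targetless, pixel-level method referenced at the end of this page exploits this by aligning edge features extracted from both the point cloud and the image.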

1> The calibration module must support offline, targetless calibration (no calibration target required).

3> The localization and mapping results should be stable, with no significant drift.
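Requirement 3 does not say how drift is measured. One common, simple proxy, assumed here rather than specified by the project, is to run a closed loop that returns to its starting point and compare the first and last estimated positions, reporting the gap as a percentage of the distance travelled. The sketch below takes estimated positions as plain (x, y, z) tuples in metres. The function names and the sample trajectory are hypothetical, and any pass/fail threshold would be a further assumption.

```python
import math
from typing import Sequence, Tuple

Position = Tuple[float, float, float]


def trajectory_length(positions: Sequence[Position]) -> float:
    """Total distance travelled: sum of distances between consecutive positions."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def end_point_drift(positions: Sequence[Position]) -> Tuple[float, float]:
    """For a run that starts and ends at the same physical spot, return
    (start-to-end position error in metres, that error as % of distance travelled)."""
    if len(positions) < 2:
        raise ValueError("need at least two positions")
    length = trajectory_length(positions)
    if length == 0.0:
        raise ValueError("trajectory has zero length")
    error = math.dist(positions[0], positions[-1])
    return error, 100.0 * error / length


if __name__ == "__main__":
    # Hypothetical estimated positions (metres) for a 10 m x 10 m square loop
    # that should end where it started but is estimated to stop 0.4 m short.
    est = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 0.0),
           (0.0, 10.0, 0.0), (0.0, 0.4, 0.0)]
    err, pct = end_point_drift(est)
    print(f"end-point error {err:.2f} m, drift {pct:.2f}% of {trajectory_length(est):.1f} m")
```

This end-point measure only captures accumulated error at the loop closure. A fuller evaluation would compare the whole estimated trajectory against ground truth when one is available.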

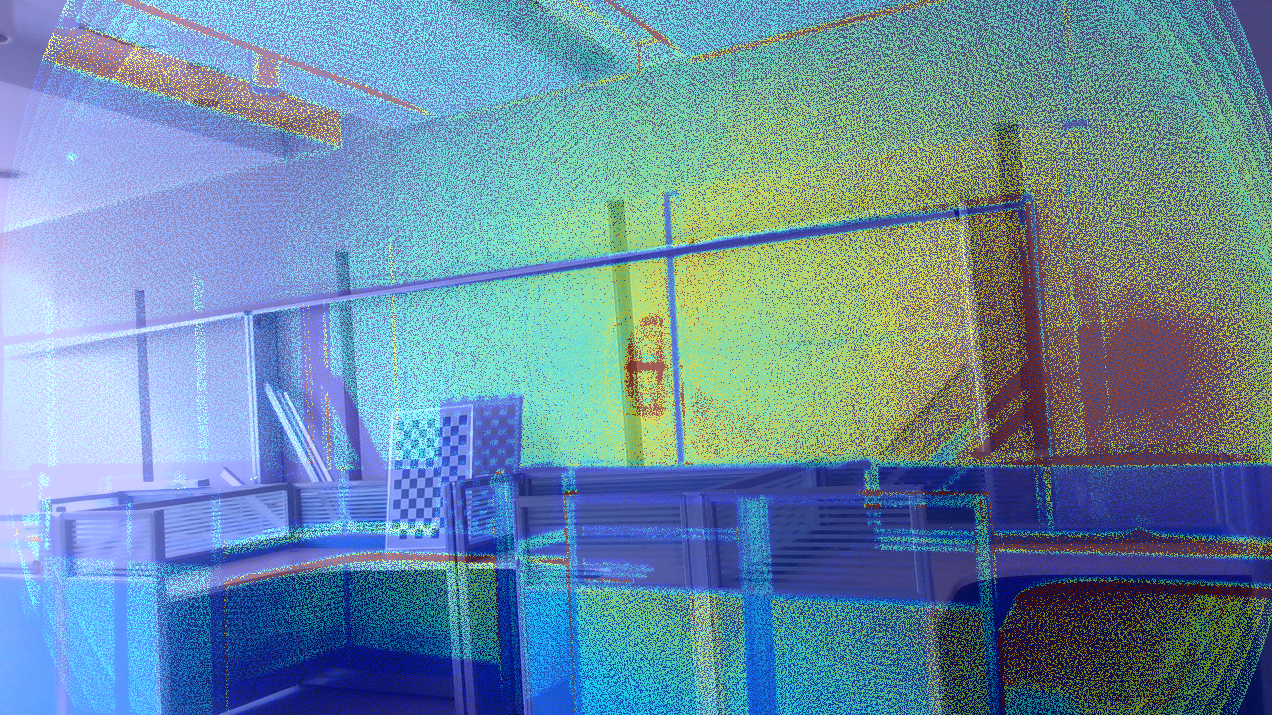

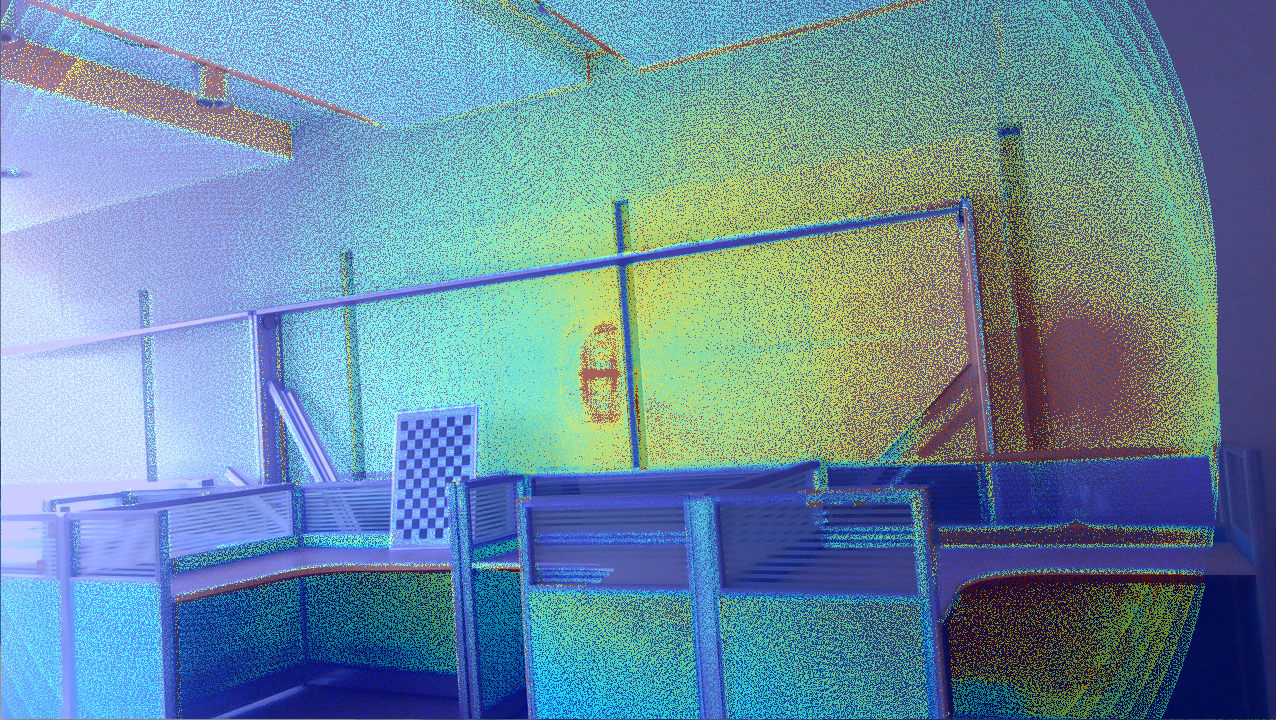

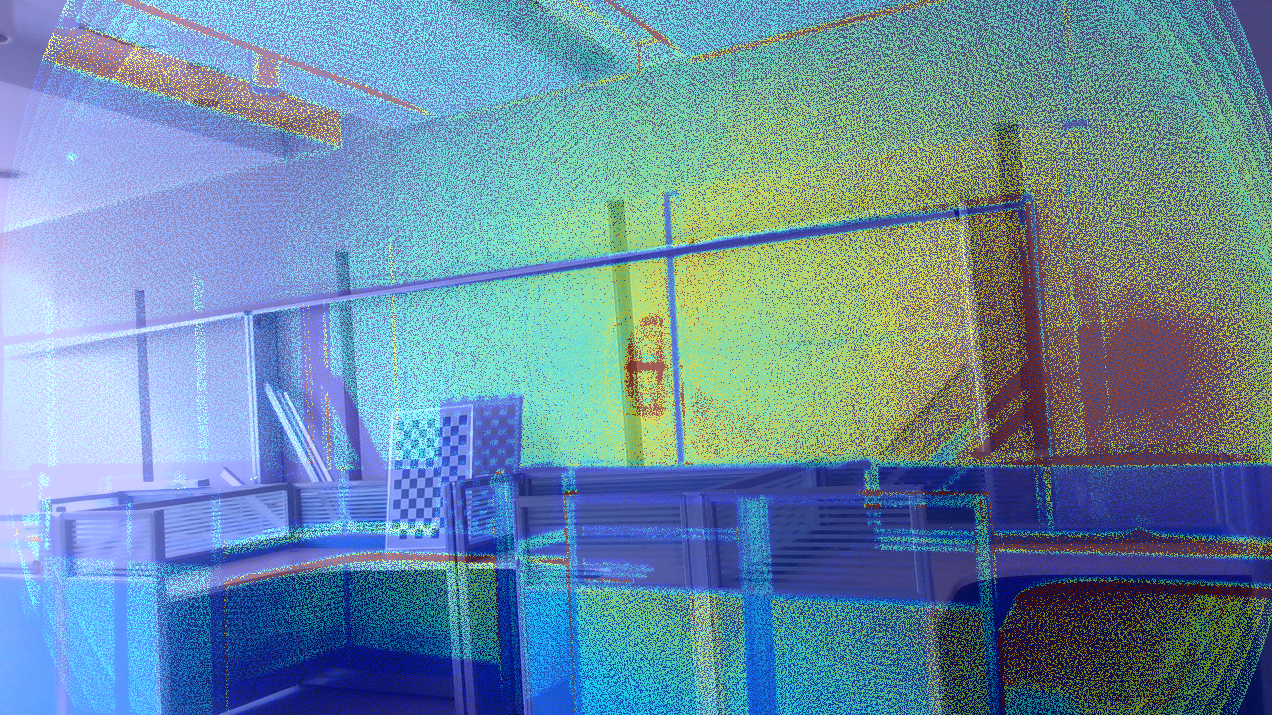

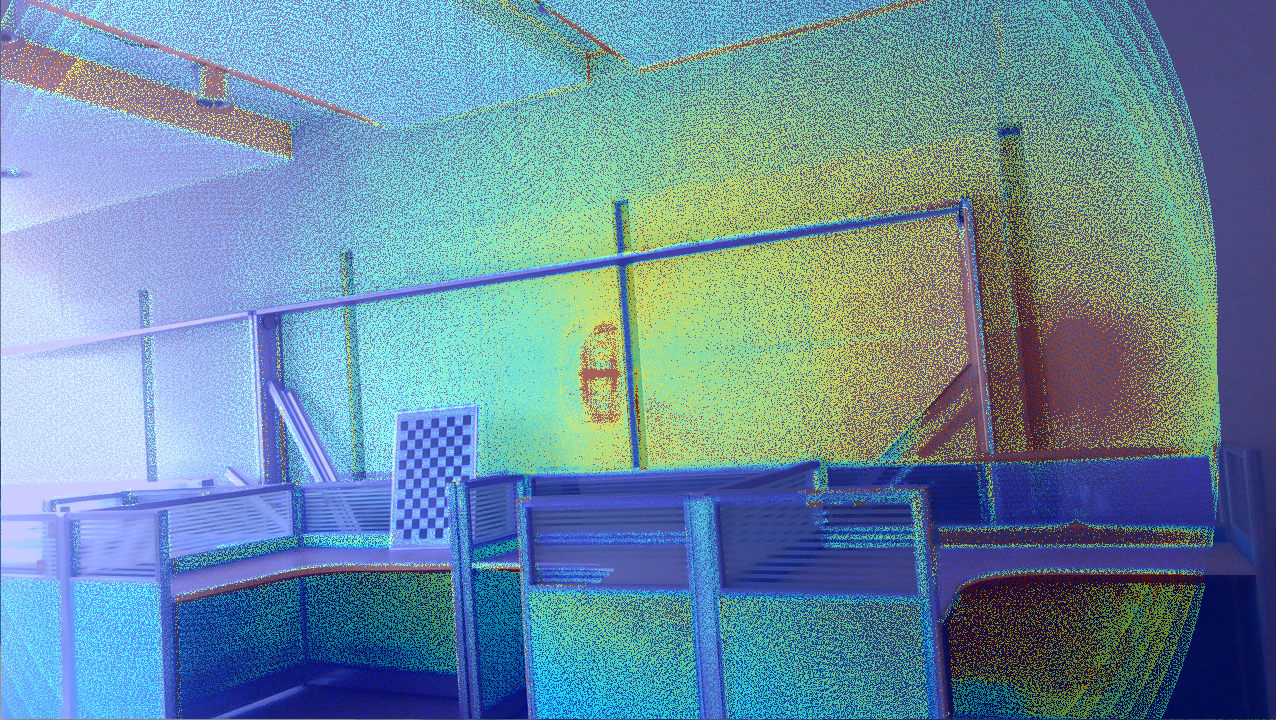

LiDAR-Camera calibration: "Pixel-level Extrinsic Self Calibration of High Resolution LiDAR and Camera in Targetless Environments."

LiDAR-Inertial-Camera SLAM: R3LIVE, "A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package."

Created on: Nov. 06, 2024