Monogenic-LBP: A New Approach for Rotation Invariant Texture Classification

Authors

- Lin Zhang, SSE, Tongji University, China

- Lei Zhang, Dept. Computing, HK Polytechnic University, Hong Kong

- Zhenhua Guo, Graduate School at Shenzhen, Tsinghua University, China

- David Zhang, Computing, HK Polytechnic University, Hong Kong

Abstract

Analysis of two-dimensional textures has many potential applications in computer vision. In this paper, we investigate the problem of rotation invariant texture classification, and propose a novel texture feature extractor, namely Monogenic-LBP (M-LBP). M-LBP integrates the traditional Local Binary Pattern (LBP) operator with the other two rotation invariant measures: the local phase and the local surface type computed by the 1st-order and 2nd-order Riesz transforms, respectively. The classification is based on the image’s histogram of M-LBP responses. Extensive experiments conducted on the CUReT database demonstrate the overall superiority of M-LBP over the other state-of-the-art methods evaluated.

Algorithm

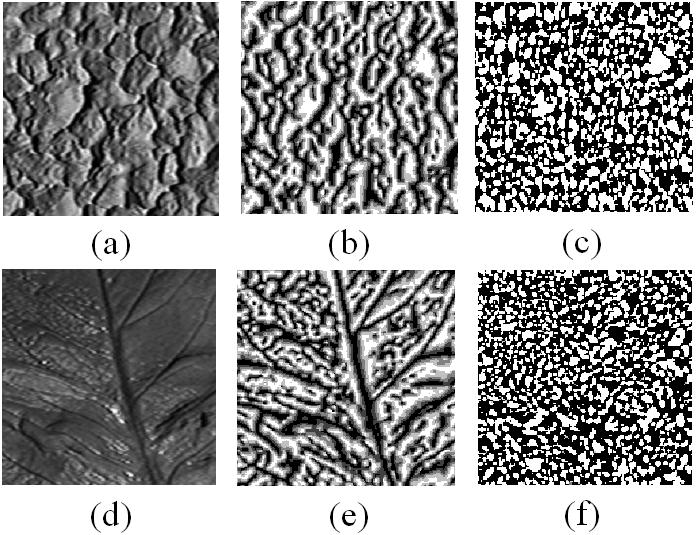

As one of the effective rotation invariant texture classification methods, LBP is widely used since it is simple yet powerful. However, LBP tends to oversimplify the local image structures. Thus, we want to find some other rotation invariant features to supplement LBP in order to improve the classification accuracy while preserving its simplicity. The local phase corresponds to a qualitative measure of a local structure (step, peak, etc) and it is a robust feature with respect to noise and illumination changes. We adopt the monogenic signal theory, which is an isotropic 2-D extension of the 1-D analytic signal, to extract the local image phase information in a rotation invariant way. Besides, we utilize the monogenic curvature tensor to extract the local surface type information, which is another rotation invariant metric. Then, we combine the uniform LBP, the local phase information and the local surface type information together as a novel texton feature, namely Monogenic-LBP (M-LBP). In real implementation, we adopt a multi-resolution analysis scheme by combining the information provided by multiple operators of varying parameters. The following figure shows the local phase and the local surface type (binarized) information extracted. (a) and (d) are two example texture images. (b) and (e) are the local phase. (c) and (f) are binarized local surface type.

At the classification stage, we use the chi-square statistics to measure the dissimilarity of sample and model histograms.

Validation

We conducted experiments on a modified CUReT database. It contains 61 textures and each texture has 92 images obtained under different viewpoints and illumination directions. We compared the performance of the proposed M-LBP method with the other three state-of-the-art rotation invariant texture classification methods, MR8, Joint, and the original LBP. We performed experiments on four different settings to simulate four situations:

1. T46A: The training set for each class was selected by taking one from every two adjacent images. Hence, there were 2,806 (61×46) models and 2,806 test samples. This setting was used to simulate the situation of large and comprehensive training set.

2. T23A: The training set for each class was selected by taking one from every four adjacent images. Hence, there were 1,403 (61×23) models and 4,209 (61×69) test samples. This setting was used to simulate the situation of small but comprehensive training set.

3. T46F: The training set for each class was selected as the first 46 images. Hence, there were 2,806 models and 2,806 test samples. This setting was used to simulate the situation of large but less comprehensive training set.

4. T23F: The training set for each class was selected as the first 23 images. Hence, there were 1,403 models and 4,209 test samples. This setting was used to simulate the situation of small and less comprehensive training set.

The classification accuracies and the feature sizes for the four evaluated methods are listed in Table 1. In addition, we also care about the classification speeds. At the classification stage, the histogram of the test image will be built at first and then it will be matched to all the models generated from the training samples. In Table 2, we list the time for one test histogram construction and for one matching at the classification stage by each method. All the algorithms were implemented with Matlab 7.4 except that a C++ implemented kd-tree (encapsulated in a MEX function) was used in MR8 and Joint to accelerate the labeling process. Experiments were performed on a Dell Inspiron 530s PC with Intel 6550 processor and 2GB RAM.

Table 1. Classification accuracies (%) and feature sizes

| Histogram Bins | T46A | T23A | T46F | T23F | |

|

LBP |

54 |

95.47 |

93.09 |

85.64 |

78.50 |

|

MR8 |

2440 |

97.65 |

96.15 |

88.70 |

77.83 |

|

Joint |

2440 |

97.36 |

95.37 |

87.13 |

78.55 |

| M-LBP | 540 | 97.86 | 96.72 | 89.67 | 81.21 |

Table 2. Time consumption (msec) at the classification stage

| Histogram construction | One matching | |

|

LBP |

87 |

0.022 |

|

MR8 |

4960 |

0.089 |

|

Joint |

13173 |

0.089 |

| M-LBP | 221 | 0.035 |

With Table 1 and Table 2, we evaluate the four schemes from three aspects: the classification accuracy, the feature size, and the runtime classification speed. First, with respect to the classification accuracy, M-LBP outperforms all the other 3 approaches under all the 4 experimental settings. Especially, it performs significantly better than the other ones in “T23F”. By contrast, although MR8 and Joint achieves similar performance with M-LBP in “T46A”, they perform much worse than M-LBP in “T23F”. This is because both MR8 and Joint require a training stage, which depends much on the training samples; when the training set is small and not comprehensive (as in “T23F”), the classification accuracy will drop largely. Thus, this indicates that the proposed M-LBP is more suitable for real applications where training samples are limited and not comprehensive. Second, M-LBP requires a moderate feature size, bigger than LBP but much smaller than MR8 and Joint. Although the feature size of M-LBP is bigger than LBP, considering the gain in the classification accuracy, it is deserved. Third, the four schemes have very different classification speeds. LBP and M-LBP work much faster than MR8 and Joint. In MR8 and Joint, in order to build the histogram of the test image, every pixel on the test image need to be labeled to one item in the texton dictionary, which is quite time consuming. Such a process is not required in LBP and M-LBP. Besides, an extra training period is needed in MR8 and Joint to build the texton dictionary, which is also not required in LBP and M-LBP. Thus, in general, the proposed M-LBP has the merits of high classification accuracy, small feature size and fast classification speed.

Reference

Lin Zhang, Lei Zhang, Zhenhua Guo, and David Zhang, "Monogenic-LBP: a new approach for rotation invariant texture classification", in: Proc. ICIP, pp. 2677-2680, 2010.