Vision-based Parking-slot Detection: A Benchmark and A Learning-based Approach

Lin Zhang1,2, Junhao Huang1, Xiyuan Li1, Ying Shen1, and Dongqing Wang1

1School of Software Engineering, Tongji University, Shanghai, China

2Collaborative Innovation Center of Intelligent New Energy Vehicle, Tongji University, Shanghai, China

Introduction

This is the website for our paper "Vision-based Parking-slot Detection: A Benchmark and A Learning-based Approach", submitted for review.

Recent years have witnessed a growing interest in developing automatic parking systems in the field of intelligent vehicles. However, how to effectively and efficiently locate parking-slots using a vision-based system is still an unresolved issue. In this paper, we attempt to fill this research gap to some extent, and our contributions are twofold. Firstly, to facilitate the study of vision-based parking-slot detection, a large-scale parking-slot image database is established. For each image in this database, the marking-points and parking-slots are carefully labeled. Such a database can serve as a benchmark for designing and validating parking-slot detection algorithms. Secondly, a learning-based parking-slot detection approach is proposed. With this approach, given a test image, the marking-points are detected first and then the valid parking-slots are inferred. Its efficacy and efficiency have been corroborated on our database.

Tongji Parking-slot Dataset 1.0

We have established a large-scale benchmark dataset, in which surround-view images were collected from typical underground and outdoor parking sites using low-cost fish-eye cameras. The parking-slots are defined by T-shaped or L-shaped marking-points. This dataset comprises two subsets, the training set and the testing set.

1. Training Set (training (positive samples).zip, training (negative samples).zip)

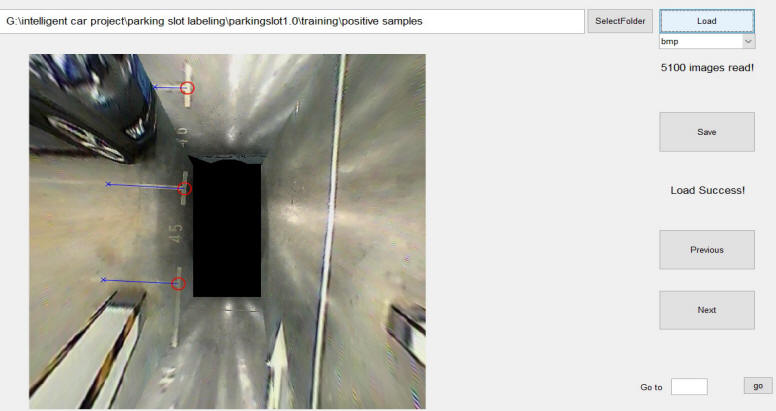

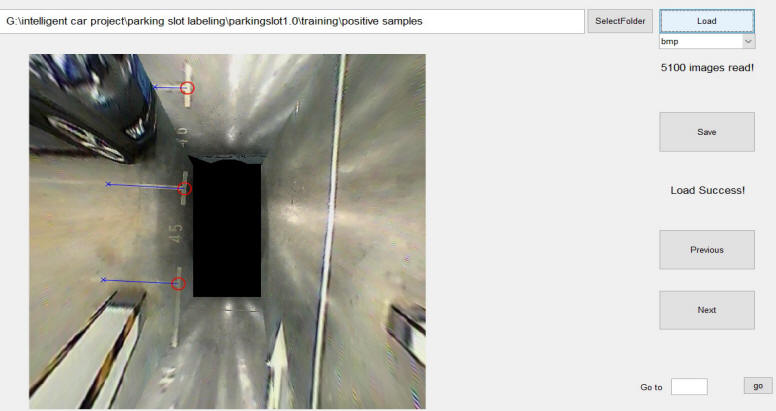

Unzip the two ZIP files, "training (positive samples).zip" and "training (negative samples).zip". In the "training (positive samples)" folder, there are 5100 images, and for each image the marking-points are labeled. The labeling information for a marking-point includes its position and its local orientation, which is defined by the neighboring parking-line segments. You can view the labeling results with our Matlab tool (viewMarkingPointsLabels.zip), as shown in the following figure.

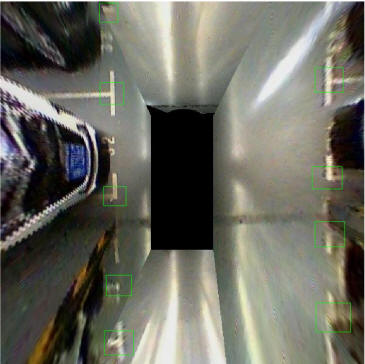

In the "training (negative samples)" folder, there are 2400 labeled images, and for each image the regions enclosing (potential) marking-points are marked. You can use our Matlab script (viewAnnotation.m) to view the annotations, as shown in the following figure. At the training stage, regions randomly sampled from these 2400 images that do not overlap any marked region can be used as negative training samples. Usually, this is done using a bootstrapping process.
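The random sampling step described above can be sketched in Python as follows. This is only an illustration, not the authors' Matlab code: the `(x, y, w, h)` box format, the image size, the patch size, and the function names are all assumptions made for the example, and the bootstrapping loop (retraining on hard negatives) is not shown.

```python
import random

def overlaps(a, b):
    """Return True if axis-aligned boxes a and b, each (x, y, w, h), share any area."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah

def sample_negatives(img_w, img_h, marked, patch, count, rng, max_tries=10000):
    """Draw up to `count` square windows of side `patch` that avoid every marked region.

    `marked` is a list of (x, y, w, h) boxes around potential marking-points
    (hypothetical format; the real annotation layout may differ).
    """
    negatives = []
    for _ in range(max_tries):
        if len(negatives) == count:
            break
        x = rng.randrange(0, img_w - patch + 1)
        y = rng.randrange(0, img_h - patch + 1)
        window = (x, y, patch, patch)
        # Keep the window only if it is disjoint from all marked regions.
        if not any(overlaps(window, m) for m in marked):
            negatives.append(window)
    return negatives

# Example with made-up numbers: one marked region in a 600x600 image.
marked_regions = [(100, 100, 50, 50)]
windows = sample_negatives(600, 600, marked_regions, patch=48, count=20,
                           rng=random.Random(0))
```

Passing a seeded `random.Random` keeps the sampled windows reproducible between runs.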

2. Testing Set (ForMarkingPoints.zip, ForParkingSlots.zip)

The testing set has two parts, ForMarkingPoints and ForParkingSlots.

"ForMarkingPoints" contains 500 labeled images and is used for testing the accuracy of a marking-point detection algorithm. Each image has an associated mat file containing the labeled marking-point information. We use the log-average missing rate as an objective metric to measure the accuracy of a marking-point detection algorithm. The Matlab source code that computes the log-average missing rate and plots the curve of missing rate against FPPI (false positives per image), as shown in the following figure, is provided in drawMRFPPICurve.zip.
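For readers unfamiliar with the metric, here is a minimal Python sketch of one common way to compute a log-average missing rate. It assumes the convention often used in the object-detection literature: the missing rate is sampled at nine FPPI reference points evenly spaced in log-space between 0.01 and 1, and the samples are averaged in the log domain. The reference points, the fallback value of 1.0, and the function name are assumptions; the code in drawMRFPPICurve.zip may differ.

```python
import math

def log_average_miss_rate(fppi, miss_rate, num_refs=9, lo=1e-2, hi=1.0):
    """Geometric mean of the missing rate sampled at log-spaced FPPI references.

    `fppi` must be sorted in ascending order, with `miss_rate[i]` the missing
    rate reached at `fppi[i]`.
    """
    refs = [lo * (hi / lo) ** (i / (num_refs - 1)) for i in range(num_refs)]
    log_sum = 0.0
    for ref in refs:
        # Use the curve point with the largest FPPI not exceeding the reference.
        # If the curve has no such point, assume everything is missed (1.0).
        sampled = 1.0
        for f, m in zip(fppi, miss_rate):
            if f <= ref:
                sampled = m
            else:
                break
        # Clamp to avoid log(0) for a perfect detector.
        log_sum += math.log(max(sampled, 1e-10))
    return math.exp(log_sum / num_refs)

# Example with a made-up three-point curve.
lamr = log_average_miss_rate([0.01, 0.1, 1.0], [0.6, 0.3, 0.1])
```

Averaging in the log domain keeps a single low-FPPI operating point from dominating the summary, which is why a geometric rather than arithmetic mean is used in this convention.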

"ForParkingSlots" contains 500 labeled images and is used for testing the final accuracy of a parking-slot detection algorithm. The ground-truths for parking-slots are stored in gt.mat, which is a CELL structure with 500 elements; element i corresponds to the parking-slots in image i. If image i has n parking-slots, the ith element is an n×9 matrix in which each row corresponds to one parking-slot. Each row has 9 entries with the semantic meaning [x1 y1 x2 y2 x3 y3 x4 y4 orientation]. P1=[x1 y1] and P2=[x2 y2] are the coordinates of the entrance-line points; [x3 y3] and [x4 y4] are the coordinates of the other two points of the parking-slot. [x3 y3] and [x4 y4] are not labeled directly; they are estimated from P1, P2, and the orientation using prior knowledge about the size of parking-slots. If the parking-slot is on the anticlockwise side of the vector P1P2, orientation = 1; otherwise orientation = -1. Currently, when matching the detected parking-slots with the ground-truths, [x3 y3] and [x4 y4] are not used; the matching is based entirely on the entrance-lines and orientations. You can use our Matlab script (viewGroundTruth.m) to view the ground-truth information. We also provide Matlab code (precisionRecall.zip) to compute the Precision-Recall of the detection results against the ground-truth.
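To make the row layout and the matching rule concrete, the following Python sketch parses one ground-truth row and counts matches using only the entrance-line points and the orientation. It is a hedged illustration rather than a port of precisionRecall.zip: the pixel tolerance `tol`, the greedy one-to-one matching, and all names (`Slot`, `slot_from_row`, `matches`, `precision_recall`) are assumptions introduced here.

```python
import math
from typing import NamedTuple, Tuple

class Slot(NamedTuple):
    p1: Tuple[float, float]   # first entrance-line point [x1 y1]
    p2: Tuple[float, float]   # second entrance-line point [x2 y2]
    orientation: int          # 1 or -1, as defined above

def slot_from_row(row):
    """Build a Slot from [x1 y1 x2 y2 x3 y3 x4 y4 orientation]; x3..y4 are ignored."""
    x1, y1, x2, y2, _x3, _y3, _x4, _y4, orientation = row
    return Slot((x1, y1), (x2, y2), int(orientation))

def matches(det, gt, tol):
    """A detection matches when both entrance points are within `tol` pixels
    of the ground truth and the orientations agree (tolerance is hypothetical)."""
    return (det.orientation == gt.orientation
            and math.dist(det.p1, gt.p1) <= tol
            and math.dist(det.p2, gt.p2) <= tol)

def precision_recall(dets, gts, tol):
    """Greedy one-to-one matching of detections to ground-truth slots."""
    unmatched = list(gts)
    true_pos = 0
    for det in dets:
        for gt in unmatched:
            if matches(det, gt, tol):
                unmatched.remove(gt)  # each ground-truth slot is used once
                true_pos += 1
                break
    precision = true_pos / len(dets) if dets else 0.0
    recall = true_pos / len(gts) if gts else 0.0
    return precision, recall

# Example with made-up coordinates: two ground-truth slots, two detections.
gts = [slot_from_row([10, 10, 60, 10, 10, 110, 60, 110, 1]),
       slot_from_row([100, 10, 150, 10, 100, 110, 150, 110, 1])]
dets = [Slot((12, 11), (61, 9), 1),       # close to the first slot
        Slot((100, 10), (150, 10), -1)]   # right position, wrong orientation
precision, recall = precision_recall(dets, gts, tol=5.0)
```

In this example the second detection is rejected despite having exact entrance-line coordinates, because its orientation places the slot on the opposite side of P1P2.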

Parking-slot Detection Algorithm: PSDL

We have proposed a learning-based approach, named PSDL, for vision-based parking-slot detection. The Matlab demo for our approach can be downloaded as PSDL.zip.

List of downloadable files

1. Positive samples for training, training (positive samples).zip

2. Negative samples for training, training (negative samples).zip

3. Test set for testing marking-point detection performance, ForMarkingPoints.zip

4. Test set for testing parking-slot detection performance, ForParkingSlots.zip

5. Demo for our parking-slot detection algorithm PSDL, PSDL.zip.

6. Our Matlab tool for viewing the marking-point labels, viewMarkingPointsLabels.zip.

7. Matlab script to view the annotations for negative samples, viewAnnotation.m.

8. Matlab script to visually view the parking-slot ground-truth of the 500 test images, viewGroundTruth.m.

9. Matlab code to compute the Precision-Recall of the detection results against the ground-truth, precisionRecall.zip.

10. Code to compute the log-average missing rate of the marking-point detection algorithm and plot the curve of missing rate against FPPI, drawMRFPPICurve.zip.

Created on: Jan. 20, 2017

Last update: Jun. 16, 2017