
Towards Contactless Palmprint Recognition: A Novel Device, A New Benchmark, and A Collaborative Representation Based Identification Approach

Lin Zhang (1,2), Lida Li (1), Anqi Yang (1), Ying Shen (1), and Meng Yang (3)

(1) School of Software Engineering, Tongji University, Shanghai, China
(2) Collaborative Innovation Center of Intelligent New Energy Vehicle, Tongji University, China
(3) College of Computer Science and Software Engineering, Shenzhen University, Shenzhen, China

Introduction

This is the website for our paper "Towards Contactless Palmprint Recognition: A Novel Device, A New Benchmark, and A Collaborative Representation Based Identification Approach", published in Pattern Recognition.

To make palmprint-based recognition systems more user-friendly and sanitary, researchers have been investigating how to design such systems to work in a contactless manner. Despite these efforts, the discriminant power of the contactless palmprint is still not well understood, mainly owing to the lack of a public, large-scale, and high-quality benchmark dataset collected with a well-designed device. To fill this gap, we first developed a highly user-friendly device for capturing high-quality contactless palmprint images and then used it to collect a large-scale palmprint dataset. For the first time, the quality of the collected images is analyzed using modern image quality assessment metrics. Furthermore, for contactless palmprint identification, we propose a novel approach, namely CR_CompCode, which achieves high recognition accuracy with an extremely low computational complexity.

Tongji Contactless Palmprint Dataset

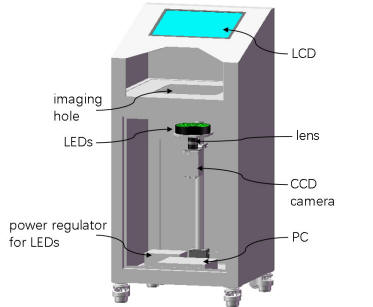

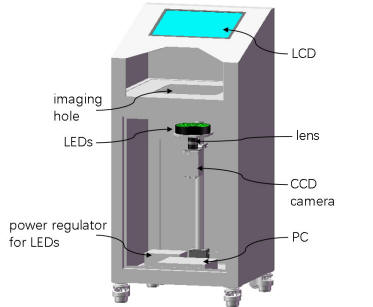

To investigate the discriminant capability of the contactless palmprint, we first designed and developed a novel contactless palmprint acquisition device, whose complete 3D CAD model is shown in Fig. 1(a). Its internal structure can be clearly seen in the 3D model shown in Fig. 1(b), and Fig. 1(c) shows a photo of the device being used by a subject. The device's metal housing comprises two parts. The lower part encloses a CCD camera (JAI AD-80 GE), a lens, a ring-shaped white LED light source, a power regulator for the light source, and an industrial computer. The top cover of the lower part has a square "imaging hole" of 230 mm × 230 mm, whose center lies on the optical axis of the lens. The upper part of the housing is wedge-shaped, and a thin LCD with a touch screen is mounted on it. The device is about 950 mm tall, so a normal adult can use it comfortably. For data acquisition, the user presents his/her hand above the "imaging hole". The user can move the hand up and down freely within the space bounded by the upper part of the housing and can watch the camera's video stream on the LCD in real time to adjust the hand's pose.

Fig. 1. (a) 3D CAD model of the device; (b) its internal structure; (c) the device being used by a subject.

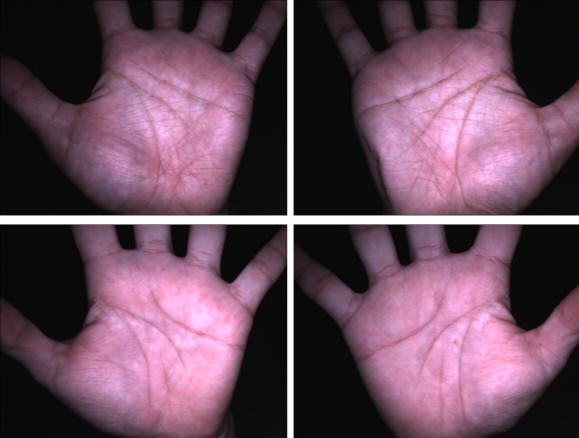

Our newly developed device has the following characteristics. First, it is highly user-friendly, so users feel comfortable when using it. The whole design follows ergonomic principles, and much attention has been paid to details. For example, all LEDs are enclosed in the housing, which prevents them from hurting the user's eyes. Moreover, the LCD mounted on the top cover shows the camera's video stream in real time, which guides the user in adjusting the hand pose and also helps alleviate the user's anxiety when using the equipment. Second, because the configurations of the camera, the lens, and the light source were carefully adjusted, the acquired images are of high quality, i.e., they have high contrast and a high signal-to-noise ratio. Four sample palmprint images collected using our device are shown in Fig. 2, in which even minute creases on the palms can be observed. Third, the collected palmprint images have a simple dark background, because they are acquired against the dark back of the LCD. Such a simple background largely reduces the complexity of subsequent data processing and accordingly improves the system's robustness.
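To illustrate why a uniformly dark background simplifies processing, the sketch below separates the hand from the background with a single global Otsu threshold. It is a hypothetical example, not the ROI extraction algorithm used for the released ROI images (which is not described on this page); it assumes an 8-bit grayscale image in which the illuminated hand is brighter than the background, and the function names `otsu_threshold` and `hand_mask` are illustrative only.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return Otsu's threshold t (0-255) for an 8-bit grayscale image.

    Pixels with value <= t form class 0 (background), the rest class 1.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)          # probability of class 0 for each t
    mu = np.cumsum(prob * levels)    # cumulative first moment up to t
    mu_total = mu[-1]                # global mean intensity
    # Between-class variance; 0/0 at the extremes is mapped to 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0
    return int(np.argmax(sigma_b))


def hand_mask(gray: np.ndarray) -> np.ndarray:
    """Boolean mask of (assumed bright) hand pixels against a dark background."""
    return gray > otsu_threshold(gray)


if __name__ == "__main__":
    img = np.full((100, 100), 20, dtype=np.uint8)  # dark background
    img[30:70, 30:70] = 200                        # bright 40 x 40 "hand"
    print(hand_mask(img).sum())                    # 1600
```

Against a cluttered background a single global threshold would generally not be enough; with a dark, uniform background it usually is.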

Fig. 2

Using the developed device, we have established a large-scale contactless palmprint image dataset. As the device is straightforward to use, the only instruction given to each subject was to stretch his/her hand naturally so that the gaps between the fingers could be clearly seen on the screen. Images were collected from 300 volunteers, 192 males and 108 females. Among them, 235 subjects were 20 to 30 years old and the others were 30 to 50 years old. Samples were collected in two separate sessions. In each session, each subject provided 10 images of each palm, so 40 images of 2 palms were collected from each subject. In total, the dataset contains 12,000 images captured from 600 different palms. The average interval between the first and second sessions was about 61 days; the maximum and minimum intervals were 106 days and 21 days, respectively.
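The dataset totals follow directly from the collection protocol described above:

```latex
\begin{aligned}
N_{\text{palms}} &= 300\ \text{subjects} \times 2\ \text{palms} = 600,\\
N_{\text{images per session}} &= 600\ \text{palms} \times 10\ \text{images} = 6{,}000,\\
N_{\text{images}} &= 6{,}000 \times 2\ \text{sessions} = 12{,}000.
\end{aligned}
```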

The original palmprint images can be downloaded here: Original Images.

ROI images extracted using our algorithm are also released: ROI Images.

Naming convention. The dataset contains two folders, "session1" and "session2", which hold the images collected in the first and the second session, respectively. Two images in "session1" and "session2" with the same name were collected from the same palm. In each session, images "00001" to "00010" are from the first palm, "00011" to "00020" are from the second palm, and so on; each session thus contains 6,000 images from 600 different palms. Each image in "Original Images" has a corresponding ROI image in "ROI Images".
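The naming convention can be captured by a few helpers that map an image name to its palm index and pair images across sessions. This is a sketch based only on the description above: it assumes the file stem is the five-digit image number (the file extension is not stated on this page and is simply ignored), and the helper names are hypothetical.

```python
from pathlib import PurePosixPath

IMAGES_PER_PALM = 10  # images per palm in each session, as described above
NUM_PALMS = 600       # palms per session


def palm_index(filename: str) -> int:
    """Return the 1-based palm index for an image name such as '00013'.

    Any file extension is stripped before parsing the number.
    """
    n = int(PurePosixPath(filename).stem)
    if not 1 <= n <= IMAGES_PER_PALM * NUM_PALMS:
        raise ValueError(f"image number out of range: {n}")
    return (n - 1) // IMAGES_PER_PALM + 1


def image_names(palm: int) -> list[str]:
    """Return the ten five-digit image numbers of one palm within a session."""
    first = (palm - 1) * IMAGES_PER_PALM + 1
    return [f"{n:05d}" for n in range(first, first + IMAGES_PER_PALM)]


def cross_session_pair(name: str) -> tuple[str, str]:
    """Images with the same name in both session folders come from the same palm."""
    return (f"session1/{name}", f"session2/{name}")


if __name__ == "__main__":
    print(palm_index("00013"))                     # 2
    print(image_names(2)[0], image_names(2)[-1])   # 00011 00020
    print(cross_session_pair("00013"))             # ('session1/00013', 'session2/00013')
```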

Classification Method: CR_CompCode

For fast palmprint classification, we propose a method named CR_CompCode, whose details can be found in our paper. On our dataset, its rank-1 recognition rate is 98.78%, and the time cost of one identification operation (against a background dataset comprising 6,000 images from 600 palms) is 12.48 ms.
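CR_CompCode is a collaborative representation based approach; its exact formulation is given in the paper and is not reproduced here. As a hedged illustration of the general idea only, the sketch below implements generic collaborative representation based classification with regularized least squares (CRC-RLS) on arbitrary feature vectors, plus a rank-1 recognition rate. Feature extraction (e.g. how CompCode features would be turned into vectors) is omitted, and the function names, the regularization value `lam`, and the synthetic data are assumptions. The projection matrix is computed once offline, so identifying a probe needs only one matrix-vector product plus per-class residuals, which is why CRC-style classifiers tend to be cheap at query time.

```python
import numpy as np


def unit_columns(M: np.ndarray) -> np.ndarray:
    """L2-normalize each column (one sample per column)."""
    return M / np.linalg.norm(M, axis=0, keepdims=True)


def fit_crc(X: np.ndarray, lam: float = 1e-3) -> np.ndarray:
    """Offline step: P = (X^T X + lam * I)^{-1} X^T for a d x n gallery matrix X."""
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T)


def classify_crc(P, X, labels, y):
    """Online step: code y over the whole gallery, then return the class
    with the smallest regularized residual ||y - X_c a_c|| / ||a_c||."""
    alpha = P @ y
    best_label, best_r = None, np.inf
    for c in np.unique(labels):
        idx = labels == c
        r = np.linalg.norm(y - X[:, idx] @ alpha[idx]) / (np.linalg.norm(alpha[idx]) + 1e-12)
        if r < best_r:
            best_label, best_r = c, r
    return best_label


def rank1_rate(P, X, labels, probes, probe_labels):
    """Fraction of probe columns whose top-ranked class is the true class."""
    hits = [classify_crc(P, X, labels, probes[:, j]) == probe_labels[j]
            for j in range(probes.shape[1])]
    return float(np.mean(hits))


if __name__ == "__main__":
    # Hypothetical synthetic data: 5 classes, 3 gallery samples each, 50-D features.
    rng = np.random.default_rng(0)
    n_classes, per_class, d = 5, 3, 50
    centers = rng.normal(size=(d, n_classes))
    labels = np.repeat(np.arange(n_classes), per_class)
    X = unit_columns(centers[:, labels] + 0.1 * rng.normal(size=(d, labels.size)))
    probes = unit_columns(centers + 0.1 * rng.normal(size=(d, n_classes)))
    P = fit_crc(X)
    print(rank1_rate(P, X, labels, probes, np.arange(n_classes)))
```

Dividing by ||a_c|| follows the usual CRC-RLS decision rule; whether CR_CompCode uses exactly this rule is not stated on this page.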

The source code of CR_CompCode can be downloaded here: CR_CompCode.

Created on: Jun. 09, 2016

Last update: Apr. 16, 2017