Research on Image Quality Assessment

Lin Zhang, School of Software Engineering, Tongji University

Lei Zhang, Dept. of Computing, The Hong Kong Polytechnic University

Key words: image quality assessment, IQA, FSIM, FSIMC, SSIM, VIF, MS-SSIM, IW-SSIM, IFC, PSNR, NQM, VSNR, SR_SIM, MAD, GSM, RFSIM

Introduction

Image quality assessment (IQA) aims to use computational models to measure image quality consistently with subjective evaluations. With the rapid proliferation of digital imaging and communication technologies, IQA has become an important issue in numerous applications such as image acquisition, transmission, compression, restoration, and enhancement. Since subjective IQA methods cannot be readily and routinely used in many scenarios, e.g. real-time and automated systems, it is necessary to develop objective IQA metrics that measure image quality automatically and robustly. Meanwhile, the evaluation results are expected to be statistically consistent with those of human observers. To this end, the scientific community has developed various IQA methods over the past decades. This website aims to provide beginners in this area with enough basic knowledge of IQA.

What are the different categories of IQA problems?

According to the availability of a reference image, objective IQA metrics can be classified as full reference (FR), no-reference (NR) and reduced-reference (RR) methods.

Full Reference (FR) IQA

In the FR IQA problem, the distortion-free image is given. Such an image is usually considered to have "perfect" quality and is called the "reference image" in IQA terminology. A set of its distorted versions is also provided. Your task is to devise a computerized algorithm that evaluates the perceptual quality of each distorted image.
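To make the FR setting concrete, here is a minimal sketch of PSNR, one of the simplest FR metrics listed in the key words. It assumes 8-bit grayscale images stored as plain lists of rows; the function name `psnr`, the `peak` parameter, and the pixel values are illustrative choices, not part of any particular toolbox.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio (in dB) between two equally sized
    grayscale images given as lists of rows of pixel values."""
    if len(reference) != len(distorted):
        raise ValueError("images must have the same number of rows")
    squared_error = 0.0
    count = 0
    for ref_row, dist_row in zip(reference, distorted):
        if len(ref_row) != len(dist_row):
            raise ValueError("images must have the same number of columns")
        for r, d in zip(ref_row, dist_row):
            squared_error += (r - d) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


# Toy 3x3 example (made-up pixel values).
reference = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
distorted = [[54, 55, 60], [59, 75, 61], [76, 65, 59]]
print(psnr(reference, distorted))
```

PSNR only measures pixel-wise error, so it is a baseline rather than a model of perceived quality; the point here is just the interface of an FR metric: two images in, one score out.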

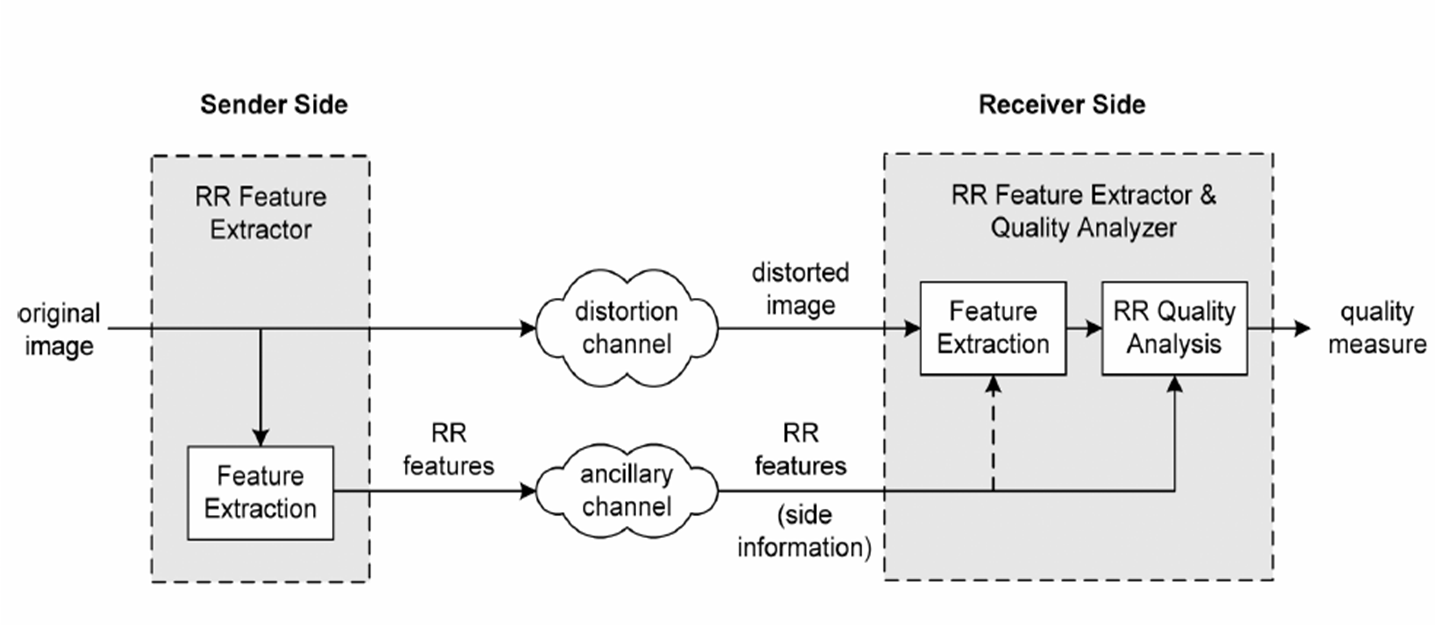

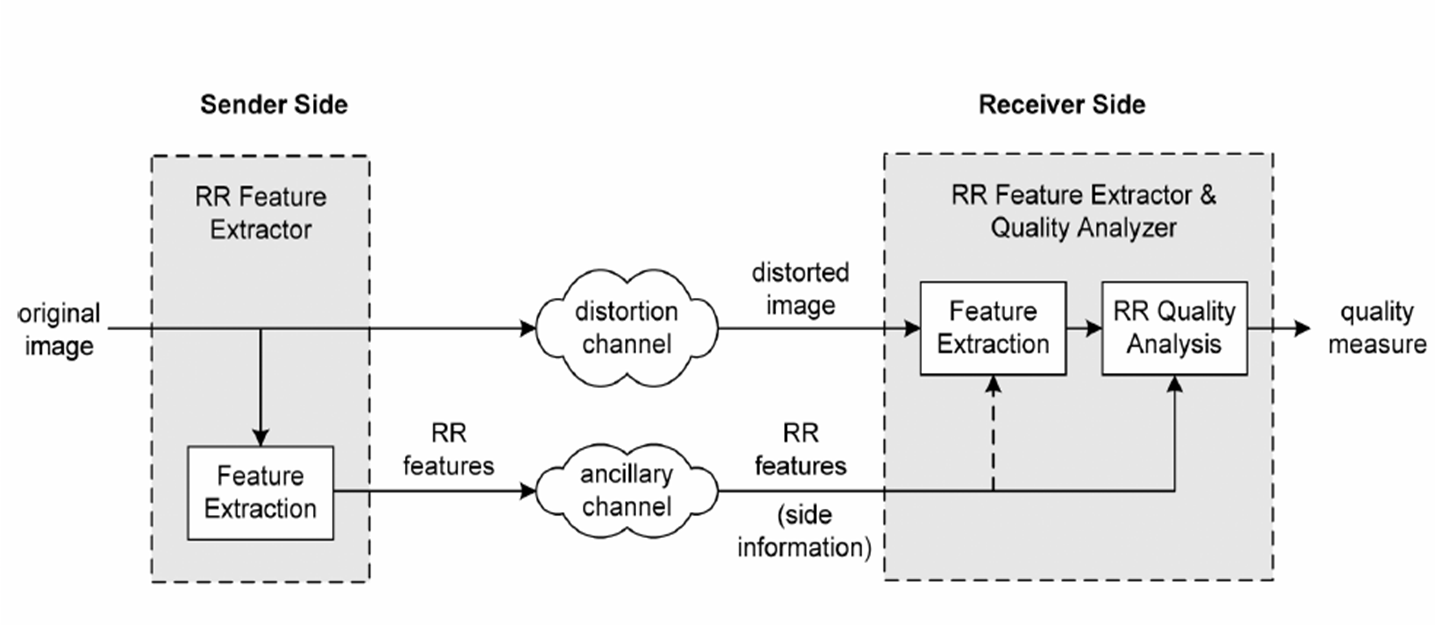

Reduced Reference (RR) IQA

In the RR IQA problem, the distorted image is of course given. The reference image is not available; however, partial information about it is known. What kind of information is known depends on your algorithm's scheme. RR algorithms can be useful in applications such as real-time visual information communication over wired or wireless networks, where they can be adopted to monitor image quality degradation or to control network streaming resources. The general framework of an RR IQA metric is illustrated by a figure extracted from the paper "Q. Li, Z. Wang, Reduced-reference image quality assessment using divisive normalization-based image representation, IEEE Journal of Selected Topics in Signal Processing, vol. 3, no. 2, pp. 202-211, 2009".

No Reference (NR) IQA

In the NR IQA problem, only the distorted image is given. Strictly speaking, in such a case we cannot call it a "distorted" image, since we do not know the corresponding distortion-free reference image. You need to design an algorithm to evaluate the quality of the given image. NR is the most difficult of the IQA problems. To the best of my knowledge, there is currently no successful universal NR IQA algorithm. Most of the existing NR IQA metrics assume that the distortion affecting the image is known beforehand. For example, there exist NR IQA metrics to evaluate the quality of JPEG, JPEG2000, or blurred images.

How to evaluate IQA metrics?

If you want to evaluate the performance of existing IQA metrics, or to compare your proposed IQA metric with existing ones, you need to conduct experiments on the publicly available image databases established for the purpose of evaluating IQA metrics. Normally, such a database contains a dozen or so reference images; for each reference image, there are several distorted versions corresponding to several different distortion types. Each distorted image is rated by several human observers, and a final subjective score is assigned to it according to some rules. In order to evaluate an IQA metric, you need to compute objective scores for all the distorted images in the database using the selected IQA metric. Then, you can compute several correlation measures (and the RMSE) between the obtained objective scores and the subjective scores provided by the database. In this way, the performance of different IQA metrics can be compared.
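The procedure above can be sketched as a short loop. This is only an illustration under stated assumptions: the record layout (`reference`, `distorted`, `mos` keys), the helper `collect_score_pairs`, the toy metric `negative_mse`, and all numbers are hypothetical and do not correspond to the file format of any real database.

```python
def collect_score_pairs(database, metric):
    """Run an FR metric on every distorted image and pair each objective
    score with the subjective score stored in the database."""
    subjective_scores = []
    objective_scores = []
    for entry in database:
        objective_scores.append(metric(entry["reference"], entry["distorted"]))
        subjective_scores.append(entry["mos"])
    return subjective_scores, objective_scores


def negative_mse(reference, distorted):
    """Toy FR metric on flat pixel lists: values closer to 0 mean better."""
    n = len(reference)
    return -sum((r - d) ** 2 for r, d in zip(reference, distorted)) / n


# Hypothetical three-image "database" with made-up subjective scores.
toy_database = [
    {"reference": [10, 20, 30], "distorted": [10, 20, 30], "mos": 5.0},
    {"reference": [10, 20, 30], "distorted": [12, 19, 33], "mos": 3.5},
    {"reference": [10, 20, 30], "distorted": [20, 5, 45], "mos": 1.2},
]

subjective_scores, objective_scores = collect_score_pairs(toy_database, negative_mse)
print(subjective_scores, objective_scores)
```

The two resulting lists are what the correlation and error measures are computed on.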

Currently, to the best of my knowledge, there are eight publicly available image databases in the IQA community: the TID2013 database, the TID2008 database, the CSIQ database, the LIVE database, the IVC database, the Toyama-MICT database, the Cornell A57 database, and the Wireless Imaging Quality database (WIQ). However, these eight databases are quite different in terms of the number of reference images, the number of distorted images, the number of distortion types, the number of human observers, and the image format (grayscale or color). In my opinion, TID2008, CSIQ, and LIVE are the most comprehensive ones. The characteristics of these eight databases are summarized in the following table.

| Database | Source Images | Distorted Images | Distortion Types | Image Type | Observers |
| --- | --- | --- | --- | --- | --- |
| TID2013 | 25 | 3000 | 24 | color | 971 |
| TID2008 | 25 | 1700 | 17 | color | 838 |
| CSIQ | 30 | 866 | 6 | color | 35 |
| LIVE | 29 | 779 | 5 | color | 161 |
| IVC | 10 | 185 | 4 | color | 15 |
| Toyama-MICT | 14 | 168 | 2 | color | 16 |
| A57 | 3 | 54 | 6 | gray | 7 |
| WIQ | 7 | 80 | 5 | gray | 60 |

Suppose that you have already calculated objective scores for all the distorted images in a database with a selected IQA metric. You can now analyze its performance. In the IQA community, there are four widely used measures for evaluating the performance of IQA metrics. In the following, $n$ denotes the number of distorted images in the database.

The first two are the Spearman rank order correlation coefficient (SROCC) and the Kendall rank order correlation coefficient (KROCC). They measure the prediction monotonicity of an IQA metric, since they operate only on the ranks of the data points and ignore the relative distances between them. SROCC is defined as:

$$\mathrm{SROCC} = 1 - \frac{6\sum_{i=1}^{n} d_i^2}{n(n^2-1)}$$

where $d_i$ is the difference between the i-th image's ranks in the subjective and objective evaluations. KROCC is defined as:

$$\mathrm{KROCC} = \frac{n_c - n_d}{\frac{1}{2}n(n-1)}$$

where $n_c$ is the number of concordant pairs in the data set and $n_d$ is the number of discordant pairs. Let $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ be a set of joint observations from two random variables X and Y, such that all the values of $x_i$ and of $y_i$ are unique. A pair of observations $(x_i, y_i)$ and $(x_j, y_j)$ is said to be concordant if the ranks of both elements agree, that is, if both $x_i > x_j$ and $y_i > y_j$, or both $x_i < x_j$ and $y_i < y_j$. The pair is said to be discordant if $x_i > x_j$ and $y_i < y_j$, or if $x_i < x_j$ and $y_i > y_j$. If $x_i = x_j$ or $y_i = y_j$, the pair is neither concordant nor discordant. With Matlab, it is easy to compute the Spearman or Kendall rank order correlation of two vectors (one holding the subjective scores, the other the corresponding objective scores) with the following script:

SROCC_Corr_Coef = corr(subjectiveScores, objectiveScores, 'type', 'spearman');

KROCC_Corr_Coef = corr(subjectiveScores, objectiveScores, 'type', 'kendall');
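For readers who want to see the two rank correlations spelled out, the following is a minimal Python sketch written directly from the usual tie-free definitions: SROCC as 1 - 6·Σd²/(n(n²-1)) with d the per-image rank difference, and KROCC as (concordant - discordant) / (n(n-1)/2). The function names and the toy scores are illustrative, and the SROCC formula here assumes there are no tied scores, so results may differ from library routines when ties occur.

```python
def ranks(values):
    """Rank of each value (1 = smallest); assumes all values are distinct."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    rank = [0] * len(values)
    for position, index in enumerate(order, start=1):
        rank[index] = position
    return rank


def srocc(subjective, objective):
    """Spearman rank order correlation, tie-free formula."""
    n = len(subjective)
    rank_s, rank_o = ranks(subjective), ranks(objective)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rank_s, rank_o))
    return 1.0 - 6.0 * sum_d2 / (n * (n * n - 1))


def krocc(subjective, objective):
    """Kendall rank order correlation from concordant/discordant pair counts."""
    n = len(subjective)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            product = (subjective[i] - subjective[j]) * (objective[i] - objective[j])
            if product > 0:
                concordant += 1
            elif product < 0:
                discordant += 1
            # product == 0: tied pair, neither concordant nor discordant
    return (concordant - discordant) / (0.5 * n * (n - 1))


# Made-up scores: only the second and third images are ranked differently.
subjective = [1.0, 2.0, 3.0, 4.0, 5.0]
objective = [0.1, 0.3, 0.2, 0.8, 0.9]
print(srocc(subjective, objective), krocc(subjective, objective))
```

In this toy example one pair out of ten is discordant, which gives an SROCC of about 0.9 and a KROCC of about 0.8.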


In the following, we suppose that $s_i$ is the subjective score of the i-th image and $x_i$ is its objective score. To compute the other two evaluation measures, we need to apply a regression analysis that provides a nonlinear mapping between the predicted objective scores and the subjective mean opinion scores (MOS). The third measure is the Pearson linear correlation coefficient (PLCC) between MOS and the objective scores after nonlinear regression. The fourth is the root mean squared error (RMSE) between MOS and the objective scores after nonlinear regression. For the nonlinear regression, the mapping function proposed in "H.R. Sheikh et al., A statistical evaluation of recent full reference image quality assessment algorithms, IEEE Trans. Image Processing, vol. 15, no. 11, pp. 3440-3451, 2006" is widely used. We first map $x_i$ to $q_i$ by the following function:

$$q_i = \beta_1\left(\frac{1}{2} - \frac{1}{1+\exp\left(\beta_2(x_i-\beta_3)\right)}\right) + \beta_4 x_i + \beta_5$$

where $\beta_1, \ldots, \beta_5$ are parameters to be fitted. Then, the PLCC can be computed as

$$\mathrm{PLCC} = \frac{\sum_{i=1}^{n}(q_i-\bar{q})(s_i-\bar{s})}{\sqrt{\sum_{i=1}^{n}(q_i-\bar{q})^2\,\sum_{i=1}^{n}(s_i-\bar{s})^2}}$$

where $\bar{q}$ and $\bar{s}$ are the means of the mapped objective scores and of the subjective scores, respectively.

Of course, such a Pearson linear correlation coefficient can be conveniently computed by using the following Matlab script:

PLCC = corr(subjectiveScores, nonLinearMappedObjectiveScores, 'type', 'Pearson');
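As a rough Python counterpart, the sketch below evaluates a five-parameter logistic mapping of the form commonly attributed to Sheikh et al., q = β1(1/2 - 1/(1 + exp(β2(x - β3)))) + β4·x + β5, and then computes the Pearson correlation from its definition. It does not perform the fitting itself: the `beta` values are placeholders chosen for illustration, where in practice they would come from a nonlinear least-squares fit against MOS. All names and numbers are assumptions of this sketch.

```python
import math


def logistic_map(x, beta):
    """Five-parameter logistic mapping from an objective score x to q.
    Assumes beta[1] * (x - beta[2]) is moderate enough for math.exp."""
    b1, b2, b3, b4, b5 = beta
    return b1 * (0.5 - 1.0 / (1.0 + math.exp(b2 * (x - b3)))) + b4 * x + b5


def plcc(mapped_scores, subjective_scores):
    """Pearson linear correlation coefficient between two score lists."""
    n = len(mapped_scores)
    mean_q = sum(mapped_scores) / n
    mean_s = sum(subjective_scores) / n
    covariance = sum((q - mean_q) * (s - mean_s)
                     for q, s in zip(mapped_scores, subjective_scores))
    var_q = sum((q - mean_q) ** 2 for q in mapped_scores)
    var_s = sum((s - mean_s) ** 2 for s in subjective_scores)
    return covariance / math.sqrt(var_q * var_s)


# Placeholder parameters (NOT fitted) and made-up scores.
beta = (4.0, 6.0, 0.5, 0.0, 3.0)
objective_scores = [0.2, 0.4, 0.6, 0.9]
subjective_scores = [1.4, 2.5, 3.7, 4.8]
mapped_scores = [logistic_map(x, beta) for x in objective_scores]
print(plcc(mapped_scores, subjective_scores))
```

Because the result depends on the fitted `beta`, reporting the fitting function and its initialization alongside PLCC makes the numbers reproducible.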

The fourth measure, RMSE, can be simply defined as

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(q_i - s_i)^2}$$
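A minimal Python sketch of the RMSE, taken here as the square root of the mean squared difference between the mapped objective scores and the subjective scores, could look as follows. The function name and the sample values are illustrative assumptions.

```python
import math


def rmse(mapped_scores, subjective_scores):
    """Root mean squared error between mapped objective scores and MOS."""
    if len(mapped_scores) != len(subjective_scores):
        raise ValueError("score lists must have the same length")
    n = len(mapped_scores)
    squared_error = sum((q - s) ** 2
                        for q, s in zip(mapped_scores, subjective_scores))
    return math.sqrt(squared_error / n)


# Made-up scores on a 1-5 MOS scale.
print(rmse([1.5, 2.4, 3.9, 4.6], [1.4, 2.5, 3.7, 4.8]))
```

Unlike the correlation coefficients, the RMSE depends on the scale of the subjective scores, so values from databases with different MOS ranges are not directly comparable.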


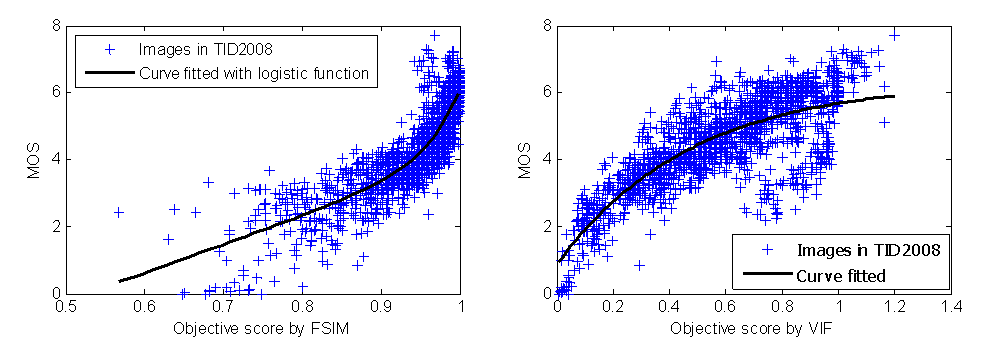

In addition to the four evaluation measures defined above, researchers usually plot the subjective scores against the objective scores on a 2-D graph, together with the fitted curve. The accompanying figure is such an example: it shows scatter plots of subjective MOS versus the scores predicted by the FSIM and VIF IQA metrics on the TID2008 database.

What are the performances of modern FR IQA metrics?

In the past decade, a dozen or so FR IQA metrics have been proposed; however, few papers give a systematic evaluation of all the popular IQA metrics on all the available datasets. This is because new IQA metrics and new testing datasets are continuously emerging. Sometimes different researchers report different evaluation results even for the same IQA metric on the same dataset, because they used different or even wrong implementations. In addition, to compute PLCC and RMSE, researchers need to perform a nonlinear fitting of the results; however, people sometimes use different fitting functions. Even with the same fitting function, the fitting results can differ because of different parameter initializations. Considering these situations, we want to give here a thorough evaluation of all the popular IQA metrics known to us on all the available datasets. All the experimental settings, including the source code used, the fitting function used, the parameter initialization for the fitting function, and the way to generate the scatter plots, will be described in detail. We will keep updating the materials provided here.

4. Evaluation of Multiscale-SSIM.

11. Evaluation of UQI.

12. Evaluation of GSM.

13. Evaluation of MAD.

14. Evaluation of SR-SIM.

15. Evaluation of RFSIM.

Our related works

Lin Zhang, Ying Shen, and Hongyu Li, "VSI: A visual saliency induced index for perceptual image quality assessment", IEEE Trans. Image Processing, vol. 23, no. 10, pp. 4270-4281, 2014. (website and source code)

Lin Zhang and Hongyu Li, "SR-SIM: A fast and high performance IQA index based on spectral residual", in Proc. ICIP, pp. 1473-1476, 2012. (website and source code)

Lin Zhang, Lei Zhang, Xuanqin Mou, and David Zhang, "A comprehensive evaluation of full reference image quality assessment algorithms", in Proc. ICIP, pp. 1477-1480, 2012.

Lin Zhang, Lei Zhang, Xuanqin Mou, and David Zhang, "FSIM: A feature similarity index for image quality assessment", IEEE Trans. Image Processing, vol. 20, no. 8, pp. 2378-2386, 2011. (website and source code)

Lin Zhang, Lei Zhang, and Xuanqin Mou, "RFSIM: A feature based image quality assessment metric using Riesz transforms", in Proc. ICIP, pp. 321-324, 2010. (website and source code)


Created on: Jan. 18, 2011

Last update: Aug. 30, 2014