
SDSP: A Novel Saliency Detection Method by Combining Simple Priors

Lin Zhang, Zhongyi Gu, and Hongyu Li

School of Software Engineering, Tongji University, Shanghai

Introduction

This is the website for our paper "SDSP: A Novel Saliency Detection Method by Combining Simple Priors", in Proc. ICIP, 2013.

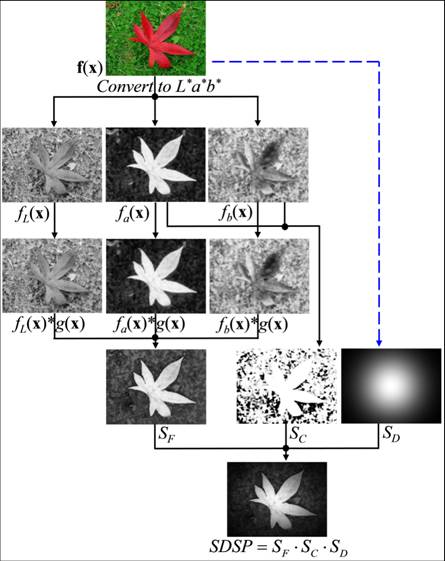

Salient region detection in images is an important and fundamental research problem in neuroscience and psychology, and it serves as an indispensable step for numerous machine vision tasks. In this paper, we propose a novel, conceptually simple salient region detection method, namely SDSP, which combines three simple priors. First, the way the human visual system detects salient objects in a visual scene can be well modeled by band-pass filtering. Second, people are more likely to pay attention to the center of an image. Third, warm colors are more attractive to people than cold colors. Extensive experiments conducted on a benchmark dataset indicate that SDSP outperforms other state-of-the-art algorithms by yielding higher saliency prediction accuracy. Moreover, SDSP has quite low computational complexity, making it an outstanding candidate for time-critical applications. The following figure shows the flowchart of the SDSP computation for a given image.

Illustration of the computation process of SDSP.
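This page does not give formulas for the three priors, so the following is only an illustrative sketch of how a center (location) prior could be expressed and how several prior maps might be merged. The Gaussian falloff, the `sigma` parameter, the pixel-wise product, and the function names `center_prior` and `combine_priors` are all assumptions made for illustration; they are not taken from SDSP.m.

```python
import math


def center_prior(height, width, sigma):
    """Hypothetical location prior: 1.0 at the image center, decaying outward.

    Assumption: a Gaussian of the distance to the center; sigma is a free
    parameter chosen for illustration only.
    """
    cy = (height - 1) / 2.0
    cx = (width - 1) / 2.0
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def combine_priors(*maps):
    """Merge same-sized prior maps by pixel-wise product (assumed rule)."""
    combined = [row[:] for row in maps[0]]
    for prior in maps[1:]:
        for y, row in enumerate(prior):
            for x, value in enumerate(row):
                combined[y][x] *= value
    return combined
```

With a product, a pixel scores high only when every prior map agrees that it is salient, which is one simple way to let three weak cues reinforce each other.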

Source Code

The source code can be downloaded here: SDSP.m.

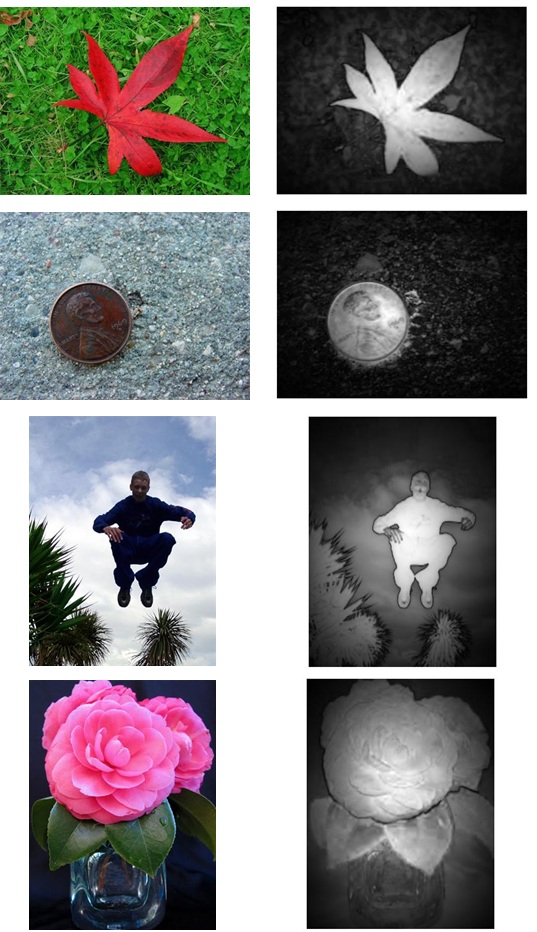

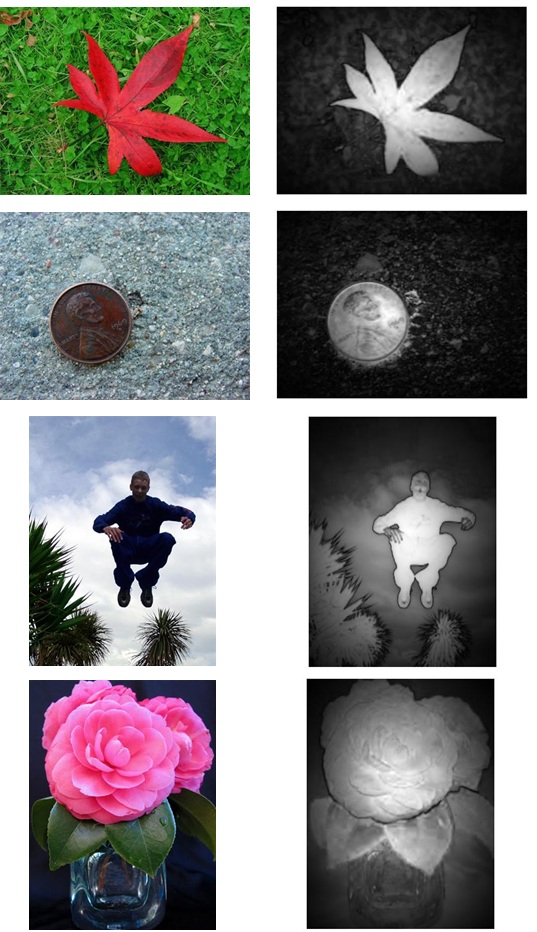

Sample Results

Saliency detection results obtained with our proposed algorithm, SDSP, are shown in the above figure. The left column shows the original images, while the right column shows the corresponding saliency maps.

Evaluation Results

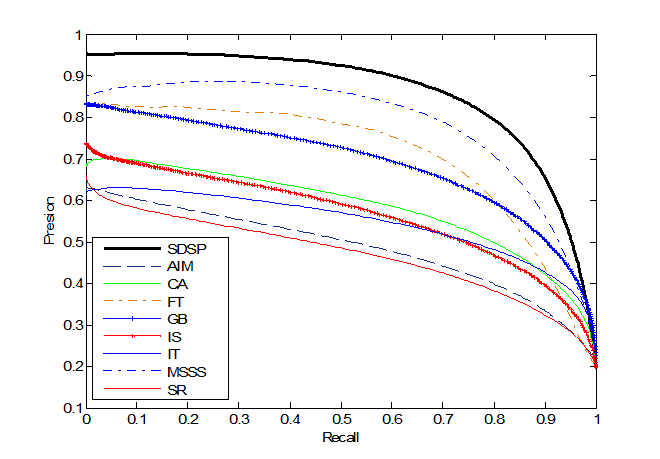

We thoroughly compared our approach, SDSP, with eight other state-of-the-art saliency detection methods on a publicly available dataset [1] comprising 1000 images with binary ground truth. The methods used for comparison were AIM [2], CA [3], FT [1], GB [4], IS [8], IT [6], MSSS [7], and SR [5].

Experiment 1.

We evaluated the performance of each saliency detection algorithm in the context of salient object segmentation. For a given saliency map with values in the range [0, 255], the simplest way to get a binary segmentation of the salient object is to threshold the saliency map at a threshold $T_f \in [0, 255]$. As $T_f$ varies from 0 to 255, different precision-recall pairs are obtained, and a precision-recall curve can be drawn. The average precision-recall curve is generated by averaging the results over all 1000 test images. The resulting curves are shown in the following figure.
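The fixed-threshold procedure of Experiment 1 can be sketched as follows. This is a minimal, dependency-free illustration using nested lists, not the evaluation code used for the paper. The function names, the `>=` comparison, and the convention that precision is 1.0 when no pixel is predicted salient are assumptions.

```python
def precision_recall_curve(saliency, ground_truth):
    """Return 256 (precision, recall) pairs, one per threshold 0..255.

    saliency: 2-D list of integers in [0, 255].
    ground_truth: 2-D list of 0/1 values with the same shape.
    """
    sal = [v for row in saliency for v in row]
    gt = [bool(v) for row in ground_truth for v in row]
    total_positive = sum(gt)
    curve = []
    for threshold in range(256):
        # Assumption: a pixel counts as salient when value >= threshold.
        predicted = [v >= threshold for v in sal]
        true_positive = sum(1 for p, g in zip(predicted, gt) if p and g)
        predicted_positive = sum(predicted)
        # Assumed convention: precision is 1.0 if nothing is predicted salient.
        precision = (true_positive / predicted_positive
                     if predicted_positive else 1.0)
        recall = true_positive / total_positive if total_positive else 0.0
        curve.append((precision, recall))
    return curve


def average_curves(curves):
    """Average per-image curves threshold by threshold."""
    n = len(curves)
    return [
        (sum(c[t][0] for c in curves) / n, sum(c[t][1] for c in curves) / n)
        for t in range(256)
    ]
```

Calling `precision_recall_curve` once per test image and passing the resulting list of curves to `average_curves` yields one averaged curve per method.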

Experiment 2.

In this experiment, we used an image-dependent adaptive threshold to segment objects in the image. Specifically, the adaptive threshold $T_a$ is set to twice the mean saliency of the image. Using the adaptive threshold, we obtained binarized maps of the salient objects extracted by each of the saliency detection algorithms. Then, for each algorithm and each image, we computed the F-measure, which is defined as

$$F = \frac{(1+\beta^2)\cdot \mathrm{Precision}\cdot \mathrm{Recall}}{\beta^2\cdot \mathrm{Precision} + \mathrm{Recall}}$$

where we set $\beta^2 = 0.3$ in our experiments. The F-measure reflects the overall prediction accuracy of an algorithm. The F-measure achieved by each saliency detection algorithm, averaged over the 1000 images, is listed in the following table.

| Method | F-measure |
| --- | --- |
| AIM | 0.4317 |
| CA | 0.5528 |
| FT | 0.6700 |
| GB | 0.6186 |
| IS | 0.5020 |
| IT | 0.4959 |
| MSSS | 0.7417 |
| SR | 0.4568 |
| SDSP | 0.7758 |
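The adaptive-threshold F-measure of Experiment 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' evaluation script. The function names, the `>=` comparison, and returning 0.0 when a ratio is undefined are assumptions.

```python
def adaptive_threshold(saliency):
    """Twice the mean saliency of the map, as described in Experiment 2."""
    values = [v for row in saliency for v in row]
    return 2.0 * sum(values) / len(values)


def f_measure(precision, recall, beta2=0.3):
    """F = (1 + beta2) * P * R / (beta2 * P + R), with beta2 = 0.3 by default."""
    denominator = beta2 * precision + recall
    if denominator == 0:
        return 0.0  # assumed convention when precision and recall are both 0
    return (1.0 + beta2) * precision * recall / denominator


def f_measure_for_image(saliency, ground_truth, beta2=0.3):
    """Binarize one saliency map at the adaptive threshold and score it."""
    threshold = adaptive_threshold(saliency)
    true_pos = pred_pos = actual_pos = 0
    for sal_row, gt_row in zip(saliency, ground_truth):
        for s, g in zip(sal_row, gt_row):
            predicted = s >= threshold  # assumption: >= rather than >
            actual = bool(g)
            pred_pos += predicted
            actual_pos += actual
            true_pos += predicted and actual
    # If the threshold exceeds every pixel, nothing is predicted salient.
    precision = true_pos / pred_pos if pred_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    return f_measure(precision, recall, beta2)
```

Averaging `f_measure_for_image` over all test images gives a single score per method. Because $\beta^2 = 0.3$ is less than 1, precision is weighted more heavily than recall.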

Experiment 3.

In addition to saliency prediction accuracy, we also evaluated the computational cost of each method. Experiments were performed on a standard HP Z620 workstation with a 3.2 GHz Intel Xeon E5-1650 CPU and 8 GB of RAM. The software platform was Matlab R2012a. The time each evaluated saliency detection method takes to process one 400×300 color image is listed in the following table.

| Method | Time cost (seconds) |
| --- | --- |
| AIM | 5.118 |
| CA | 33.662 |
| FT | 0.045 |
| GB | 0.464 |
| IS | 0.022 |
| IT | 0.134 |
| MSSS | 0.784 |
| SR | 0.013 |
| SDSP | 0.039 |

References

[1] R. Achanta, S. Hemami, F. Estrada, and S. Susstrunk, "Frequency-tuned salient region detection," CVPR'09, pp. 1597-1604, 2009.

[2] N. Bruce and J. Tsotsos, "Saliency based on information maximization," Adv. Neural Information Process. Syst., vol. 18, pp. 155-162, 2006.

[3] S. Goferman, L. Zelnik-Manor, and A. Tal, "Context-aware saliency detection," CVPR'10, pp. 2376-2383, 2010.

[4] J. Harel, C. Koch, and P. Perona, "Graph-based visual saliency," Adv. Neural Information Process. Syst., vol. 19, pp. 545-552, 2007.

[5] X. Hou and L. Zhang, "Saliency detection: a spectral residual approach," CVPR'07, pp. 1-8, 2007.

[6] L. Itti, C. Koch, and E. Niebur, "A model of saliency-based visual attention for rapid scene analysis," IEEE Trans. PAMI, vol. 20, pp. 1254-1259, 1998.

[7] R. Achanta and S. Susstrunk, "Saliency detection using maximum symmetric surround," ICIP'10, pp. 2653-2656, 2010.

[8] X. Hou, J. Harel, and C. Koch, "Image signature: highlighting sparse salient regions," IEEE Trans. PAMI, vol. 34, pp. 194-201, 2012.

Created on: Jan. 02, 2013

Last update: May 05, 2013